This report shares our Developer Productivity Engineering (DPE) journey at Henry, Inc. A pre-merge build of our server-side Kotlin project used to take around 20 mins, which discouraged engineers from adding more automated tests. We implemented several solutions, including Develocity, and pre-merge builds now complete within 8 mins.

About us

Henry, Inc. develops products with the mission to "keep solving social issues to make a brighter world". As a first step, we are currently developing "Henry", a cloud-based electronic medical record and receipt system that serves as a core system for small to medium-sized hospitals.

Our engineering team has ~20 engineers developing server-side Kotlin. We are in a phase of expanding both the team size and the quality of our services, which motivates us to improve developer productivity and shorten Lead Time to Change while adding more automated test cases.

DPE barriers in our project

Our Gradle project is a monolith containing ~10 subprojects. We set up a remote build cache with the help of tehlers/gradle-gcs-build-cache, and the GitHub Actions cache with the help of the setup-gradle action. Some of our engineers understand how to improve build performance with Gradle, so we believed we had done our best to improve DPE, but we still faced many barriers:

- Kotlin compilation was slow, usually taking 3 to 5 mins. In our case, the K2 compiler did not shorten compilation time.

- The unit tests, written in Kotest, took 13 to 15 mins.

The deployment workflow has one more barrier: the database migration processes (ridgepole and Algolia), which take 10 mins or more. As a result, our Lead Time to Change usually exceeds 35 mins.

The cause of our slow tests

For historical reasons, our test cases depend tightly on the datastore (Postgres) and do not sufficiently account for conflicts with each other. One test case can fail because of changes made by another, so we were forced to run test cases serially. We could not use the maxParallelForks option, even though we used a large runner with 8 cores and 16 GiB of memory so that we could launch an in-memory Postgres instance for performance.
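For reference, the option in question looks roughly like the sketch below in a Gradle Kotlin DSL build script. The fork count is an illustrative assumption, not our actual configuration. With a single shared Postgres instance, any value above 1 would let test cases in different forks interfere with each other.

```kotlin
// build.gradle.kts (sketch, values are assumptions)
tasks.withType<Test>().configureEach {
    // Kotest runs on the JUnit Platform.
    useJUnitPlatform()

    // Each fork is a separate test JVM. The default is 1, which means serial execution.
    // Using half of the available cores is only an example value.
    maxParallelForks = (Runtime.getRuntime().availableProcessors() / 2).coerceAtLeast(1)
}
```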

Why our compilation takes time

Our Gradle project is a monolith that is not yet well separated. Last year we merged two Git repositories into one, and the reorganization is still in progress. As a result, each subproject is still huge and cannot use build caches efficiently. Here is a rough graph of the dependencies among subprojects:

    graph BT
        master-data --> shared
        general-api --> master-data
        receipt-api --> master-data
        batches --> general-api

Considered solutions

Testcontainers

Rewriting existing test cases to isolate them from each other would be too costly, so we tried a process-local database instead. Testcontainers suits us well: it lets us launch a separate Postgres database for each test fork, which removes conflicts between forks. Testcontainers also makes it possible to run tests remotely, not only on GitHub Actions runners but also on Test Distribution Agents hosted by Develocity.
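A minimal sketch of the per-fork idea, assuming the Testcontainers 1.x PostgreSQL module is on the test classpath and Docker is available where tests run. The object name `ForkLocalPostgres` and the image tag are hypothetical and chosen only for illustration. Because Gradle runs every test fork in its own JVM, a JVM-wide singleton is enough to give each fork an isolated database.

```kotlin
import org.testcontainers.containers.PostgreSQLContainer

// Hypothetical helper: one Postgres container per test JVM (that is, per Gradle test fork).
object ForkLocalPostgres {
    // Started lazily on first access, then reused by every test in this fork.
    // The image tag is an assumption; pick the version that matches production.
    private val container: PostgreSQLContainer<Nothing> by lazy {
        PostgreSQLContainer<Nothing>("postgres:16-alpine").apply { start() }
    }

    // Connection settings that tests pass to their datasource setup.
    val jdbcUrl: String get() = container.jdbcUrl
    val username: String get() = container.username
    val password: String get() = container.password
}
```

Tests would read `ForkLocalPostgres.jdbcUrl` (and the credentials) when building their datasource, so no two forks ever write to the same database.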

Parallelize data migrations

We have been running data migrations in parallel with the help of a build matrix. This is easy to maintain and makes the progress of each migration visible. However, it is costly and slow. In total, our workflow took 150 mins/build (cumulative CPU time), and a quarter of that came from data migration.

Our solution is to unify all data migrations into one Gradle task and run it on a single runner. This lets us run multiple data migrations in one process with the help of coroutines, so migrations can finish in less time with fewer computing resources.
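The single-process approach can be sketched as follows, assuming kotlinx.coroutines is available. `Migration` and `runAll` are hypothetical names, and the empty lambdas stand in for the real migration steps.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.async
import kotlinx.coroutines.awaitAll
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.runBlocking

// Hypothetical stand-in for one migration step.
class Migration(val name: String, val action: suspend () -> Unit)

// Runs all migrations concurrently in one process and returns their names in input order.
// If any migration throws, coroutineScope cancels the remaining ones and rethrows.
suspend fun runAll(migrations: List<Migration>): List<String> = coroutineScope {
    migrations.map { migration ->
        // Dispatchers.IO is used because migrations mostly wait on external systems.
        async(Dispatchers.IO) {
            migration.action()
            migration.name
        }
    }.awaitAll()
}

fun main() = runBlocking {
    val finished = runAll(
        listOf(
            Migration("schema") { /* e.g. apply the database schema */ },
            Migration("search-index") { /* e.g. update search index settings */ },
        )
    )
    println("finished: $finished")
}
```

Compared with a build matrix, this pays the runner startup and checkout cost once instead of once per migration.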

Subproject separation

Separating a project into multiple subprojects is an effective way to run Gradle builds in parallel. We wanted to split our monolithic Gradle project into smaller subprojects to improve build parallelism, but our codebase is still being reorganized, so we had to defer this effort for now.

…and Develocity!

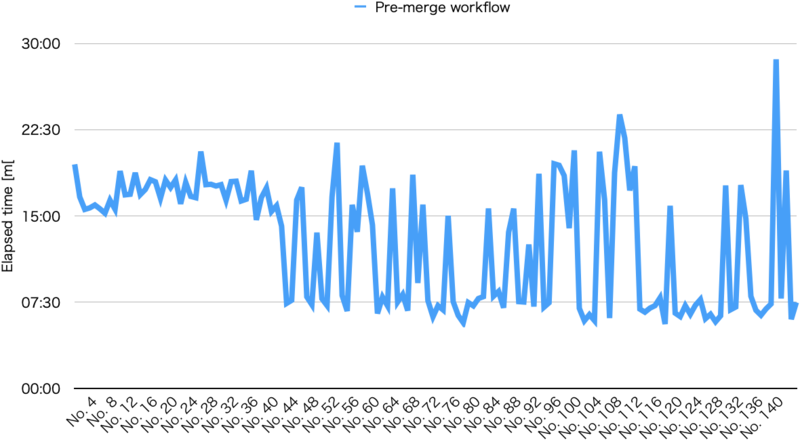

We expected that Develocity can shorten test execution time by Test Distribution Agents. After our verification, we found that it surely halved test execution time and whole pre-merge. You can find that builds with Develocity Test Distribution Agent (No.40 and later) finished in shorter time:

In this POC we had only one Test Distribution Agent host, so a Gradle build sometimes could not run tests remotely because of contention among build processes. We hope that the auto-scaling feature will stabilize performance. According to the Predictive Test Selection (PTS) simulator, PTS can skip about 20% of test cases, which would further shorten pre-merge build time.
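For readers unfamiliar with the setup, enabling Test Distribution on a test task looks roughly like the sketch below. It assumes a recent Develocity Gradle plugin is already applied and connected to a Develocity server in settings.gradle.kts (older Gradle Enterprise plugins use different names), and the executor counts are illustrative assumptions rather than our actual values.

```kotlin
// build.gradle.kts (sketch, values are assumptions)
tasks.test {
    useJUnitPlatform()

    develocity.testDistribution {
        // Allow test classes to be sent to Test Distribution Agents.
        enabled.set(true)
        // Illustrative limits on how many executors a single build may use.
        maxLocalExecutors.set(2)
        maxRemoteExecutors.set(4)
    }
}
```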

Lessons learned, and our next challenge

Through this effort, we confirmed that Testcontainers and Develocity's Test Distribution Agents halved our pre-merge build time. This is a huge contribution to keeping changes to our service frequent. There are still several areas for improvement, such as the auto-scaling feature of Test Distribution Agents, but this setup is already an important part of our development workflow.

| | Legacy builds (serial test execution) | New builds (parallel test execution) | New builds with Test Distribution Agents |
|---|---|---|---|
| Whole pre-merge workflow | 20 mins | 15 mins | 8 mins |
| Compile tasks | 3 to 5 mins | 3 to 5 mins | 3 to 5 mins |
| Test tasks | 13 to 15 mins | 8 to 10 mins | 7 to 8 mins |

However, the post-merge build, including data migration, still takes time. We will keep working on subproject separation for better parallelism and on reducing the time needed to run data migrations.